In order to help DBAs determine which tables and stored procedures might be good to take advantage of using the new In-Memory OLTP tables in SQL Server 2014, Microsoft introduced the AMR Utility. AMR stands for Analyze, Migrate, and Report. In this article I will be exploring how to use this tool.

What is the Purpose of the AMR Tool?

The AMR tool is a utility that reads captured workflow statistics to identify which tables and stored procedures would have the best performance gain if migrated to use In-Memory OLTP tables. This tool is available when you install SQL Server 2014 and set up the Management Data Warehouse. The AMR tool analyzes usage data that has been collected in the Management Data Warehouse. Therefore before you use this tool you will need to set up the Management Data Warehouse and turn on the “Transaction Performance Collection Sets” of data collectors.

These data collectors are very lightweight and can be installed on any SQL Server Instance that is running SQL Server 2008 or higher. These data collectors gather the statistics needed by the AMR tool. To understand how to set up the Management Data Warehouse and how to install these data collectors you can use the instructions in the “Configure Management Data Warehouse” section of the AMR documentation that can be found here: http://msdn.microsoft.com/en-us/library/dn205133(v=sql.120).aspx.

Setting up a Workload

Once I have set up the Management Data Warehouse and the Transaction Performance Collection Set of data collectors on my SQL Server 2014 instance I can start collecting some statistics. I will use these statistics to show you how the AMR tool provides reports to help DBA’s determine which disk based tables they might want to convert to In-Memory OLTP tables, or which stored procedures would be good candidates for porting over to be natively complied stored procedures.

The database I will be using for my examples is the AdventureWorks2012 database. Additionally I will be using a new SQL Server 2014 instance that has a freshly setup Management Data Warehouse database.

In order to show statistics for some simple stored procedures I will be creating a couple of test stored procedures that I will place in the AdventureWorks2012 sample database. Here is the script I used to create these test stored procedures:

USE [AdventureWorks2012] GO CREATE PROC [dbo].[InMemoryTst1] AS SELECT TOP 1 * FROM [AdventureWorks2012].[Person].[Address]; GO CREATE PROC [dbo].[InMemoryTst2] AS SELECT TOP 1 * FROM [AdventureWorks2012].[Person].[Person]; GO

After I run the above script to create these test stored procedures, I then verify my data collectors are running and then ran the following script to capture some data collector workflow statistics:

EXEC [dbo].[InMemoryTst1]; GO 45 Exec [dbo].[InMemoryTst2]; GO 45 SELECT * FROM [HumanResources].[Employee]; GO 45

After running this workload I’m ready to see what the AMR tool tells me about my AdventureWorks2012 database tables and stored procedures. Keep in mind I have a fresh Management Data Warehouse, so we can look at the reports produced based on the workload I just ran. When you are capturing statistics against your databases, you will want to gather statistics against a realistic workload. This might mean you need to collect statistics for a few hours or even days to capture a complete workload for your environment.

Loading the Current Data Collection Statistics into the Management Data Warehouse

Now that I have run my workflow I’m ready to start reporting. But wait, it takes some time for the data collector stats load into the Management Data warehouse, due to the schedule of the SQL Agent jobs that collect the data collection stats. Therefore before using the AMR tool I need to make sure that the workload statistics I captured actually got uploaded to the Management Data Warehouse. To make sure this happened there are a number of ways to get the currently collected data collection stats into the Management Data Warehouse. One of those ways is to right click on the “Stored Procedure Usage Analysis” and “Table Usage Analysis” data collection items in Object Explorer and select the “Collect and Upload Now” options. By doing this it will fire the SQL Server Agent jobs to load the latest statistics collected into my Management Data Warehouse.

Reviewing the AMR Tool Reports

The AMR tool helps you determine which tables or stored procedures you should migrate to use the new In-Memory OLTP tables available with SQL Server 2014. It does this by analyzing data collector statistics stored in the Management Data Warehouse. By using the AMR tool you can identify the performance improvement you will potentially see by migrating each identified table to an In-Memory OLTP table or each stored procedure to be a natively compiled stored procedure.

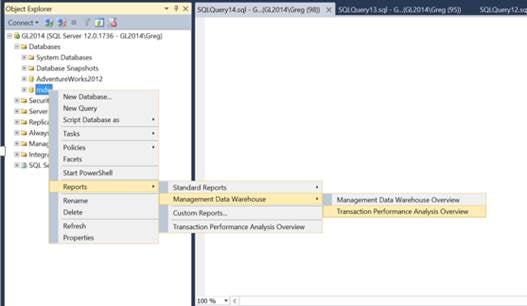

To bring up the AMR Reports I right click on my Management Data Warehouse, which is “mdw” in my case, and then select the “Report” item from the drop down menu. I then select the “Management Data Warehouse” from the Report submenu, and then finally click on the “Transaction Performance Analysis Overview” item as shown below:

Transaction Performance Analysis Overview

Upon doing this the following report will be displayed in the query window:

Transaction Performance Analysis Overview Report

On this screen you can see there are two different hyperlinks under Table Analysis: Usage Analysis and Contention Analysis. Plus one hyperlink under Stored Procedure Analysis. These hyperlinks identify the last time the data collector statistics where loaded. Let me explore the AMR reports under each of these hyperlinks.

When I click on the Usage Analysis hyperlink under Table Analysis the following report is displayed in a query window:

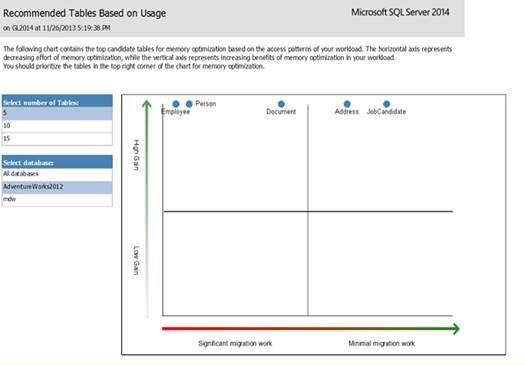

Recommended Tables Based on Usage

This report shows the candidate tables that I should consider migrating to In-Memory OLTP storage based on usage across my entire instance. Note there are two different selection items boxes on the left and a four quadrant chart on the right. The screen shot above is showing the top 5 tables in all databases on my instance that I should consider migrating to In-Memory tables. By clicking on the “AdventureWorks2012” database in the “Select Database” box on the left I can get a report of just the candidate tables from the AdventureWorks2012 database. When I click on the “AdventureWorks2012” item in the “Select Database” box the following screen is displayed:

AdventureWorks2012

On this screen you can see this graph shows 4 different quadrants that only contain tables from the AdventureWorks2012 database. The tables that are shown in the upper left quadrant are the easiest to migrate to In-Memory OLTP tables that will have the highest performance benefit. In this case it would be the Address and JobCandidate tables. You can get more detailed information about the performances gains your application might see using In-Memory OLTP tables by clicking on one of these tables. When I click on the Address table the following detailed report is displayed in another query window:

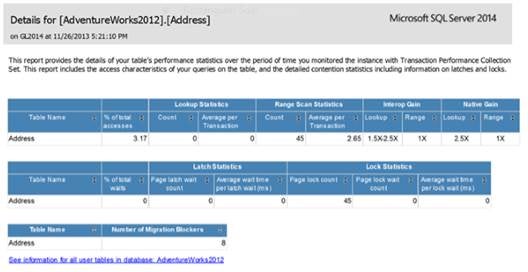

Address Table Report

Here you can see a number of different performance statistics have been displayed. If you look under the “Range Scan Statistics” you can see a “Count” column with a value of 45. This shows that I have executed a query against this table 45 time. This is exactly the number of times the stored procedure InMemoryTst1 executed the following SELECT statement:

SELECT TOP 1 * FROM [AdventureWorks2012].[Person].[Address];

The “Lookup Statistics” and “Range Scan” statistics sections of this report represents the number of lookups, or range scans that where done against the Address table for my workload. The “InterOp Gains” and “Native Gain” section of the above report show the estimated amount of performances gains I would get from my workflow if I was to convert the Address table to an In-Memory OLTP table. By using the gain numbers I can determine if I should convert this table to an In-Memory OTLP table and whether or not I should create HASH or RANGE indexes on the Address table. This report also shows the number of locks and latches that were taken during my workload. If I was to convert the Address table to an In-Memory OLTP table I would be able to eliminate all the latches and locks because In-Memory OLTP tables are latch and lock free table structures.

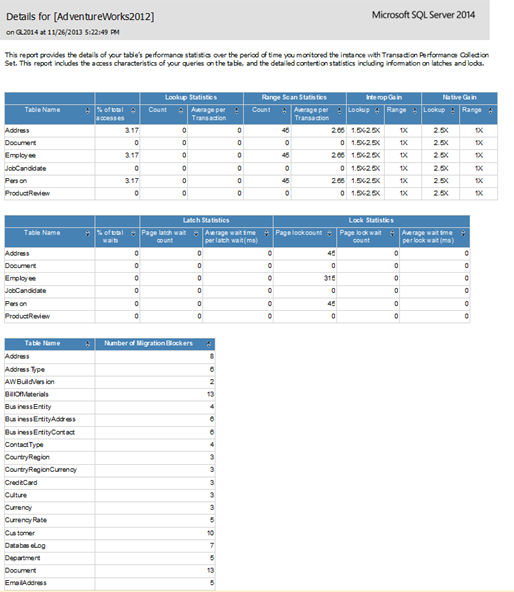

If I should want to see a single report that shows all these statistics for all the tables in the AdventureWorks2012 database I could click on the hyperlink at the bottom of the above report. When I clicked on this link the following report was displayed:

Single Report that Shows All Statistics for All Tables

By looking at this report you can see statistics that the Management Data Warehouse collected for my AdventureWorks2012 tables.

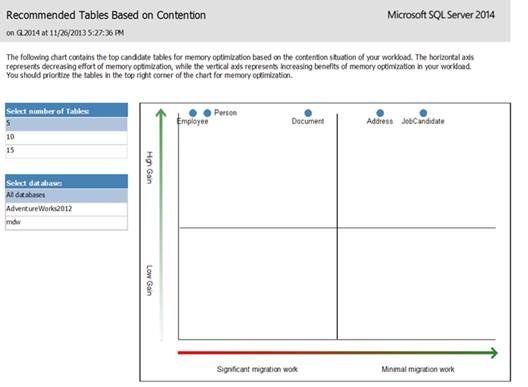

Let’s review the reports that are available when you click on the hyperlink under the “Contention Analysis” section of the “Transaction Analysis Performance Overview” report (first report shown above). When I click on this hyperlink the following report is displayed:

Contention Analysis Report

As you can see this report looks very similar to the “Recommended Tables Based on Usage” report I already shown. If I was to click on the Address table in the above report I would get the exact same report as shown in the “Details for [AdventureWorks2012].[Address}” report shown above.

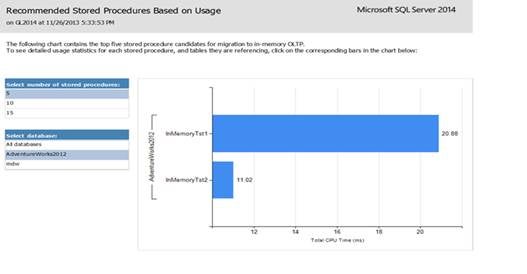

The next set of reports I want to show you are those reports you can get to by clicking on the hyperlink on the “Stored Procedure Analysis Usage Analysis” item in the “Transaction Analysis Performance Overview” report. When I clicked on this hyperlink the following report is displayed in a query window:

Stored Procedure Analysis Usage Analysis

By reviewing this report you can see it shows some statistics for the top five stored procedures at the instance level. If I want to review statistics for just the AdventureWorks2012 database I can click on the “AdventureWorks2012” item in the “Select Databases” box on the left. When I do this the following statistics for the AdventureWorks2012 stored procedures are displayed:

Top Five Stored Procedure Candidates

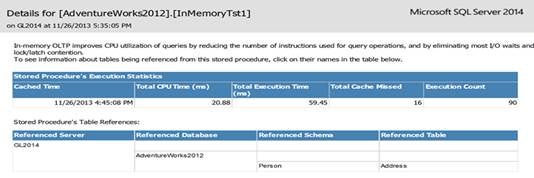

In this report you can see the “Total CPU Time (ms)” used by the two stored procedures in my workload. If want to drill down and look at statistics for the InMemoryTst1 stored procedure I just need to click on the blue bar next the InMemoryTst1 label. When I do that the following report is displayed:

Drill Down and Look at Statistics for the InMemoryTst1 Stored Procedure

In this report you can see a number of statistics related to my InMemoryTst1 stored procedure. This report also shows you the tables referenced in this stored procedure. You can use this information to determine if you should convert this stored procedure into a natively compiled stored procedure. Remember natively compiled stored procedures can only reference In-Memory OLTP tables.

As you can see there are a number of different reports that will help you determine which tables and stored procedures you should convert to utilize the performance gains of In-Memory OLTP tables.

Resources

For additional resources related to the AMR tool you can check out the following link:

AMR tool: http://msdn.microsoft.com/en-us/library/dn205133(v=sql.120).asp

SQL Blog about New AMR Tool: http://blogs.technet.com/b/dataplatforminsider/archive/2013/09/17/new-amr-tool-simplifying-the-migration-to-in-memory-oltp.aspx

Candidate Tables and Stored Procedures

By using the four quadrant chart that the AMR tool displays you can quickly determine how easy it will be to migrate reported tables to In-Memory OLTP storage. As you move forward with installing SQL Server 2014 in your environment you should consider capturing a workload against your environment and then running that workload through the AMR tool. By doing this, the AMR tool will help identify your candidate tables for migration to In-Memory OLTP tables and which stored procedures might improve performance if they are converted to natively compiled stored procedures.