In the Web 2.0 world where smart phone and Web applications are competing to

access terabytes of data, there is a new imperative for speeding up retrieval

times as much as the technology permits. Rob Gravelle lists a few coding

techniques that we as database administrators and developers can use to whittle

down unwieldy results sets into manageable chunks.

In the Web 2.0 world where smart phone and Web applications are competing to

access terabytes of data, there is a new imperative for speeding up retrieval

times as much as the technology permits. Other than the obvious solution of

beefing up hardware and conduction materials, there are software improvements

that can have a measurable impact. For instance, in the front end, searches can

be tailored to suit the user’s exact requirements, while on the data side,

mechanisms can be put in place to send large data sets in chunks or even limit

what’s returned by using some sort of algorithm for ascertaining relevance of

the results. We’ve seen all of this done in the most successful search engines,

such as Google and Bing, to name but a few.

There is also the user-driven impetus to help them narrow down their results

to workable numbers. Speaking as a developer of large-scale systems, there are

many reasons why large result sets are just a bad idea. I can only imagine how

an analyst must feel having to sift through thousands of rows of data! There is

also the issue of load that so much data places on infrastructure and

degradation of application performance. All these things contribute to our

obligation to always be mindful of result set sizes.

Today’s article will list a few coding techniques that we as database

administrators and developers can use to whittle down unwieldy results sets

into manageable chunks.

Start with the Big Picture

You have probably heard of the LIMIT clause before. It’s what usually comes

to mind when talking about constraining the number of rows returned by SELECT

statements. GROUP BY and HAVING can also be used to cut down the number of

rows, albeit they work in a very different way. Whereas LIMIT returns the first

N number of rows of a record set, GROUP BY and HAVING can be utilized to show

high level information, which may be more representative of the underlying

data.

One of the more useful capabilities of the reports that I built for clients

was something called the drilldown feature. When the user clicked on a value,

it would then generate a second report, which contained details pertinent to

that particular row:

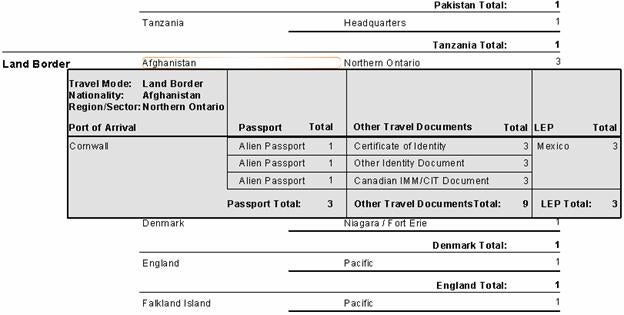

At a glance, the reader can see that there were three arrivals from

Afganistan in Northern Ontario during the reporting period. To see the details

of the arrivals they simply click on the row. In the drilldown report, the

exact Port of Arrival is listed along with document types, the Last Embarkation

Point (LEP), and count breakdowns.

The first query might use the GROUP BY clause to display high-level

information as follows:

SELECT ca.busines_line_id, ca.business_line, cl.citizenship_country, rs.region, count(cl.citizenship_country) AS cit_count FROM case ca Left Join client cl on ca.client_id = cl.client_id Left Join reporting_site rs on ca.reporting_site_cd = rs.reporting_site_cd GROUP BY rs.region;

The drilldown query

would use the record identifier to fetch the details for that row:

SELECT ca.port_of_arrival, IF(d.doc_type = 'passport', d.doc_sub_type,'') AS passport_type count( IF(d.doc_type = 'passport', d.doc_sub_type,0) ) AS passport_type_count, IF(d.doc_type <> 'passport', d.doc_sub_type,'') AS other_travel_document, count(IF(d.doc_type <> 'passport', d.doc_sub_type,0) ) AS other_document_count, r.last_embarkation_point FROM case ca, Left Join client cl on ca.client_id = cl.client_id Right Join routing on ca.case_id = r.case_id Right Join documents d on ca.case_id = d.case_id GROUP BY d.doc_sub_type HAVING ca.busines_line_id = @busines_line_id, cl.citizenship_country_id = @citizenship_country_id, rs.region = @region;

Note that the above code is highly simplified. For instance, mixing

non-grouped fields with aggregated ones requires an intermediary query as

explained in the Eliminating

Duplicate Rows From MySQL Result Sets article.

Top N Records

When used in combination with aggregate functions such as Count(), Min(),

Max(), and Sum(), the GROUP BY clause will apply the function to each group in

the GROUP BY field list. While that makes it easy to get statistics that are

broken down by group, the downside is that there is no easy way to display

non-aggregated data for other records within each group. For example, the

following SELECT statement would retrieve the highest salaries for each

department:

SELECT dept_id, max(salary) as max_salary FROM employees GROUP BY dept_id;

It would return something like the following:

dept_id max_salary 1 5100 2 5200 3 5600 4 5600

Unfortunately, aggregated functions will do nothing to help us retrieve the

top N salaries for each department. For that, we need to use a more

sophisticated query. There are many website posts dedicated to this issue,

which is commonly known as the "Top N Records per Group" problem.

Here’s one that I used to modify the above query to retrieve the top two

earners per department:

SET @c:=0; SET @a:=0; SELECT dept_id, name, gender, salary FROM (SELECT dept_id, name, gender, salary, IF(@c=dept_id, @a:=@a+1, @a:=1) AS rank, @c:=dept_id FROM employees ORDER BY dept_id, salary DESC) AS emp1 GROUP BY dept_id, rank HAVING rank <= 2;

This solution relies on variables to keep track of the departments and the

rank for each dept_id; each is stored in the calculated rank and @c columns

respectively. The dept_id is compared to the stored value so that we can count

each row within the current group. Every time the dept_id changes, our @a rank

variable is reset to 1. For this approach to work, we have to first sort the

records by the dept_id and salary. It’s also important that the latter be

sorted in descending order because we want the highest salaries ranked first.

The outer SELECT then groups by the dept_id as we did before, but this time,

the rank is added to the GROUP BY field list so that we can filter out those

that have a rank greater than 2.

Take a look at the following table and compare it to the results. You should

only see the two employees with the highest salaries for each department:

The employees Table

|

id |

dept_id |

gender |

name |

salary |

emp_id_number |

|

1 |

2 |

m |

Jon Simpson |

4500 |

1234 |

|

2 |

4 |

f |

Barbara Breitenmoser |

(NULL) |

9999 |

|

3 |

3 |

f |

Kirsten Ruegg |

5600 |

3462 |

|

4 |

1 |

m |

Ralph Teller |

5100 |

6543 |

|

5 |

2 |

m |

Peter Jonson |

5200 |

9747 |

|

6 |

2 |

m |

Allan Smithie |

4900 |

6853 |

|

7 |

4 |

f |

Mary Parker |

5600 |

2223 |

|

8 |

3 |

f |

Kirsten Ruegg |

4400 |

2765 |

The result set

|

dept_id |

name |

gender |

salary |

|

1 |

Ralph Teller |

m |

5100 |

|

2 |

Peter Jonson |

m |

5200 |

|

2 |

Allan Smithie |

m |

4900 |

|

3 |

Kirsten Ruegg |

f |

5600 |

|

3 |

Kirsten Ruegg |

f |

4400 |

|

4 |

Mary Parker |

f |

5600 |

|

4 |

Barbara Breitenmoser |

f |

(NULL) |

Note that we aren’t filtering out NULLs so an unknown salary still counts!

Pagination with Limit

There is a two-argument form of LIMIT, which can be combined with ORDER BY

for iterating through successive sections of a result set. For example, in Web

applications, it’s common to display the result of a large search across a

series of pages that each present one section of the results. Breaking down a

result set amongst many Web pages is known as pagination. The first number

parameter designates the number of rows to skip. The second one is how many

records to display. The following statements would display successive results

of 20 rows:

SELECT * FROM t ORDER BY id LIMIT 0, 20; SELECT * FROM t ORDER BY id LIMIT 20, 20; SELECT * FROM t ORDER BY id LIMIT 40, 20; SELECT * FROM t ORDER BY id LIMIT 60, 20;

Conclusion

Today we looked at a few coding techniques from the perspective of database

administrator and/or developer that can be used to whittle down unwieldy

results sets into manageable chunks. As always, don’t just assume that a

technique will improve performance. Depending on the complexity of the query,

returning more rows may yield superior performance from the database. For that

reason, it’s important to consider other components such as the Web/application

servers and application code. We recently identified several application

processes, which were hitting the database a lot more often than was necessary.

A bit of code tweaking resulted in a markedly improved query turnaround time.