Brief intro

Network cards are getting faster, and so are internet connections; there is even talk of 1 Gbps (and faster) internet becoming available. Oracle RAC can benefit from these developments, but did you know that you can stretch your RAC geographically (well, almost) if you want to? As it turns out, you can! You can already spread your nodes across a city, and several clients are running extended RAC over distances of up to 50 km!

Before designing one, ask yourself why you want to push this far, and why an extended RAC in particular. The answer is pretty simple. There are plenty of log-shipping solutions, but they only end up making the RAC environment more complex. A second benefit is that you no longer need to worry about your data being lost in a building fire, because a mirrored copy lives at the other site.

Designing an extended RAC

Let’s take a simple example. We have some of our production and test servers in one building, say Site A, and most of our production servers are hosted in another building, Site B (a few kilometers away). The cool part here is that everything is connected with FC (Fibre Channel). We literally connected all of our servers to the SAN (which happens to be at the other, hosted site). Here is a list of things you should pay attention to in this scenario:

- Keep everything redundant! Public NIC bonding, high-speed interconnect NIC bonding, and dual HBAs (for your SAN).

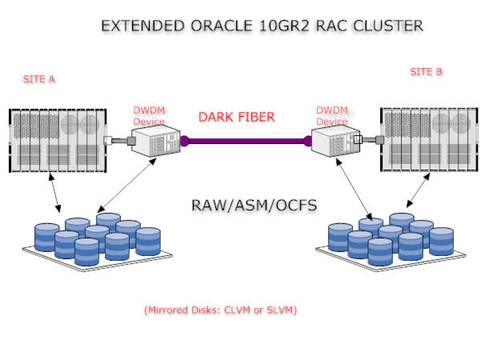

- Fiber selection: if you are planning for longer distances, consider dark fiber (as Google does).

- Disk mirroring: host-based mirroring (CLVM or SLVM), remote array-based mirroring, etc.
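To make the host-based mirroring idea concrete, here is a minimal sketch, assuming a two-way mirror with one leg per site. Two in-memory `bytearray`s stand in for the Site A and Site B LUNs, and the class and method names (`MirroredVolume`, `fail`, `resync`) are hypothetical, not part of CLVM, SLVM or ASM. Every write goes to every healthy leg, and reads prefer the local leg so they stay off the inter-site link; that read preference is a design choice of this sketch, not a claim about any particular volume manager.

```python
class MirroredVolume:
    """Hypothetical two-way host-based mirror; each leg stands in for one site's LUN."""

    def __init__(self, size: int, local_site: str = "A"):
        self.size = size
        self.legs = {"A": bytearray(size), "B": bytearray(size)}
        self.healthy = {"A": True, "B": True}
        self.local_site = local_site

    def write(self, offset: int, data: bytes) -> None:
        if offset < 0 or offset + len(data) > self.size:
            raise ValueError("write outside the volume")
        # The write only counts as done once every healthy leg holds the data.
        written = 0
        for site, leg in self.legs.items():
            if self.healthy[site]:
                leg[offset:offset + len(data)] = data
                written += 1
        if written == 0:
            raise IOError("no healthy mirror leg available")

    def read(self, offset: int, length: int) -> bytes:
        # Try the local leg first, then fall back to the remote one.
        order = [self.local_site] + [s for s in self.legs if s != self.local_site]
        for site in order:
            if self.healthy[site]:
                return bytes(self.legs[site][offset:offset + length])
        raise IOError("no healthy mirror leg available")

    def fail(self, site: str) -> None:
        # Simulate losing a site's array (e.g. the inter-site fiber is cut).
        self.healthy[site] = False

    def resync(self, site: str) -> None:
        # Naive full copy from a healthy leg: every block is rewritten,
        # which is exactly why full resynchronisation is expensive at scale.
        source = next(s for s in self.legs if s != site and self.healthy[s])
        self.legs[site][:] = self.legs[source]
        self.healthy[site] = True


if __name__ == "__main__":
    vol = MirroredVolume(16, local_site="A")
    vol.write(0, b"redo")
    vol.fail("A")              # Site A's array becomes unreachable
    print(vol.read(0, 4))      # still served, now from Site B
    vol.write(4, b"log!")      # only Site B receives this write
    vol.resync("A")            # bring Site A back in line
```

The naive `resync` copies the whole surviving leg; real volume managers try to track changed regions so they can copy less.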

Mission-critical applications running on RAC are usually redundant, and dual connections keep you online when one path fails. Fiber selection can be expensive if you go for dark fiber. Read this PDF for the FAQs.

How is it done?

DWDM (Dense Wavelength Division Multiplexing) devices need to be placed at each geographic end of the cluster, connected to each other via the dark fiber. So what exactly is WDM? Quoting Wikipedia:

…fibre-optic communications, wavelength-division multiplexing (WDM) is a technology, which multiplexes multiple optical carrier signals on a single optical fibre by using different wavelengths (colours) of laser light to carry different signals. This allows for a multiplication in capacity, in addition to making it possible to perform bidirectional communications over one strand of fibre. “The true potential of optical fibre is fully exploited when multiple beams of light at different frequencies are transmitted on the same fibre. This is a form of frequency division multiplexing (FDM) but is commonly called wavelength division multiplexing.” The term wavelength-division multiplexing is commonly applied to an optical carrier (which is typically described by its wavelength), whereas frequency-division multiplexing typically applies to a radio carrier (which is more often described by frequency). However, since wavelength and frequency are inversely proportional, and since radio and light are both forms of electromagnetic radiation, the two terms are closely analogous.
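Because wavelength and frequency are inversely proportional (λ = c / f), DWDM channels are usually specified on a frequency grid and then converted to wavelengths. The sketch below assumes the ITU-T G.694.1 fixed grid anchored at 193.1 THz with 100 GHz spacing; your DWDM vendor may use a different spacing or channel plan. The helper names are hypothetical, and the 40 × 10 Gbps capacity figure in the comment is purely illustrative. For example, the 193.1 THz anchor channel works out to roughly 1552.52 nm.

```python
C = 299_792_458.0      # speed of light in vacuum, m/s
ANCHOR_THZ = 193.1     # ITU-T G.694.1 grid anchor frequency
SPACING_GHZ = 100.0    # assumed channel spacing for this sketch


def channel_frequency_thz(n: int, spacing_ghz: float = SPACING_GHZ) -> float:
    """Frequency of grid channel n (n may be negative), in THz."""
    return ANCHOR_THZ + n * spacing_ghz / 1000.0


def wavelength_nm(freq_thz: float) -> float:
    """Convert a frequency in THz to a vacuum wavelength in nm (lambda = c / f)."""
    return C / (freq_thz * 1e12) * 1e9


def total_capacity_gbps(channels: int, per_channel_gbps: float) -> float:
    """Each wavelength carries an independent signal, so capacity scales
    with the channel count (e.g. a hypothetical 40 x 10 Gbps = 400 Gbps)."""
    return channels * per_channel_gbps


if __name__ == "__main__":
    for n in range(-2, 3):
        f = channel_frequency_thz(n)
        print(f"channel {n:+d}: {f:.1f} THz -> {wavelength_nm(f):.2f} nm")
```

Note that higher-frequency channels come out at shorter wavelengths, which is the inverse relationship the quote describes.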

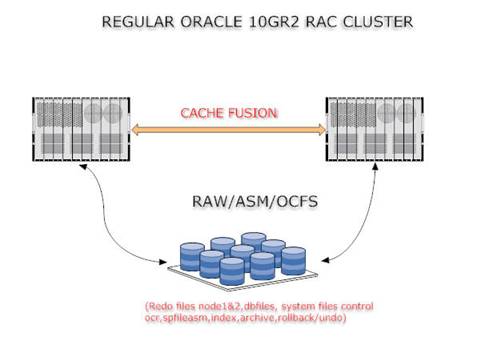

Looking at a typical RAC installation:

An extended RAC would look like this:

The first picture above shows a typical RAC, which we have built several times in the installation series. An extended RAC adds factors such as mirrored disks, dark fiber, DWDM devices and, obviously, distance. There is also the typical array-based mirroring approach (which I haven’t shown here), in which all of the I/O is sent to one node/site and mirrored to the other site.
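Distance matters mainly because of propagation delay. Light travels through optical fiber at roughly two thirds of its vacuum speed, about 5 µs per km one way, and with synchronous mirroring each write has to wait for the remote site to acknowledge it. This back-of-the-envelope sketch assumes a fiber refractive index of 1.47 and ignores DWDM, switch and array processing delays, so real-world figures will be higher; the function names are just for illustration.

```python
C_VACUUM_KM_PER_S = 299_792.458   # speed of light in vacuum, km/s
REFRACTIVE_INDEX = 1.47           # assumed typical value for single-mode fiber


def one_way_delay_us(distance_km: float, n: float = REFRACTIVE_INDEX) -> float:
    """Propagation delay over distance_km of fiber, in microseconds."""
    speed_km_per_s = C_VACUUM_KM_PER_S / n
    return distance_km / speed_km_per_s * 1e6


def sync_write_penalty_us(distance_km: float) -> float:
    """Extra latency a synchronously mirrored write pays: the data travels
    to the remote array and the acknowledgement travels back (one round trip)."""
    return 2 * one_way_delay_us(distance_km)


if __name__ == "__main__":
    for km in (1, 10, 25, 50):
        print(f"{km:>3} km: +{sync_write_penalty_us(km):7.1f} us per mirrored write")
```

At 50 km this comes to roughly half a millisecond per mirrored write from propagation alone. The same round trip applies to Cache Fusion messages between instances at different sites, which is one reason performance degrades as the sites move apart.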

With Oracle 10g Release 2 and later, a typical primary/primary RAC is the desired solution, but a third-party solution such as Norton, etc., helps raise availability should the two sites fail to connect to each other due to connectivity problems. Obviously, you have several options, such as HPSG (HP Serviceguard), Sun Cluster, IBM HACMP or Veritas, but building an all-Oracle stack offers several advantages. Oracle’s support for Linux (with its latest commitment to OS support as well) and the number of nodes supported (up to 100 in 10g R2!) make Oracle Clusterware an obvious choice to invest in.

In a typical scenario, you could choose to put your OCR, ASM spfile and voting disk on a third site. This helps keep your extended RAC up even when one of the two main sites is lost. As for volume management, current ASM has some limitations that may affect performance: a full resilvering of the ASM volumes is required if a network hiccup leaves ASM unable to see a set of volumes, and ASM does not read from the local disks by default. Still, Oracle’s commitment to RAC has a lot to offer, and future releases, together with increasing bandwidth and falling DWDM prices, will help you as a client make better choices.
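The reason for placing a voting disk on a third site is quorum: Oracle Clusterware keeps a node in the cluster only while it can access more than half of the voting disks. The sketch below, with a hypothetical `has_quorum` helper (not a Clusterware API), checks which site survives the loss of the other for two placements. It only models voting disk access and assumes the survivor can still reach the third site; real Clusterware also uses network heartbeats and node eviction to resolve an interconnect split in which both sites stay alive.

```python
def has_quorum(placement: list[str], reachable_sites: set[str]) -> bool:
    """True if a node that can reach only reachable_sites sees a strict
    majority of the voting disks. placement lists the site of each disk."""
    visible = sum(1 for site in placement if site in reachable_sites)
    return 2 * visible > len(placement)


if __name__ == "__main__":
    two_sites = ["A", "A", "B"]     # odd disk count, but only two sites
    three_sites = ["A", "B", "C"]   # one voting disk on a third, tie-breaker site
    for name, placement in (("two sites", two_sites), ("three sites", three_sites)):
        for lost in ("A", "B"):
            survivor = "B" if lost == "A" else "A"
            # The survivor still reaches its own disks and the third site, if any.
            ok = has_quorum(placement, {survivor, "C"})
            print(f"{name}: lose site {lost} -> site {survivor} "
                  f"{'survives' if ok else 'goes down'}")
```

With only two sites, whichever site holds the majority of the voting disks becomes a single point of failure for the whole cluster; with one disk per site across three sites, either main site can be lost and the other keeps quorum.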

Moreover, there is Oracle’s own Data Guard, which works over regular networks and can also be used for routine maintenance such as upgrades. We will try to cover Streams and Data Guard in upcoming articles.

Conclusion

The extended RAC solution over DWDM has been tested extensively by both Oracle and HP. The notable, and expected, result is an observable degradation of performance as distance increases. Over a typical 25 km distance, tests conducted by HP (on IP and IPC) showed a degradation of 8%, and application performance fell by 10%. At 50 km it decreased further, but we should not forget that ongoing innovation (both on the application front and in bandwidth) is making all of that easier. In addition, you should never forget to understand and tune your application at every level: the hardware, disks, I/O and local performance. I have mentioned a globally deployable RAC several times. Someday (not too far off) we will also have production RAC appliances on a virtualized grid. The face of IT is changing dramatically, and extended RAC will soon be a pervasive phenomenon.