Introduction

Organizations have been focusing more and investing heavily in Analytics, to gain the knowledge required to make improvements or changes to enhance business. Analytics help organizations to remain competitive, optimize cost and resources by helping them study their past data (e.g. internal operational data and external data like social media, data from research or government organizations) to identify potential trends, help them in analyzing the effects of certain decisions or events (e.g. Ad campaign, offers on their products and services), and analyzing past/current data to predict outcomes (e.g. credit scoring).

Though Analytics have been in use for several years, there have been a few challenges. For example, an organization needed to have a separate Analytics system so that they didn’t impact their existing operational or transactional systems. Having a separate Analytics system not only involves additional cost (for its hardware, software licenses, development, operation, etc.) but also adds to data latency. Often times data latency is in days or weeks.

SQL Server 2016 introduces a Real-time Operational Analytics feature, which allows you to run both transactional/operational and analytics workloads on the same system side by side – without having a need for another analytics system for reporting. In this article series, I am going to delve deeply into this new feature and will demonstrate how to get started with it.

What is Real-time Operational Analytics

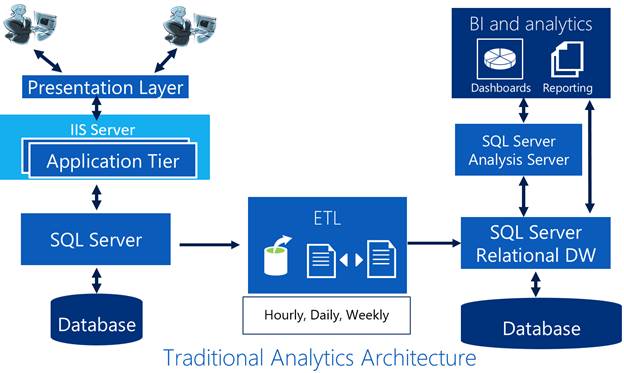

If you look at the current database systems, you see a clear separation of the operational (i.e. OLTP – OnLine Transaction Processing) and analytics (Data Warehouse or Data Marts – analytics data is usually stored in a data warehouse or data mart dedicated to running analytics queries) stores and you’ll find separate systems for operational and analytics workloads. Operational systems like websites and applications work off the hot data in the operational store while you perform reporting and analytics on the analytics store (most of the time on the cold data). You needed to implement and run Extract, Transform, and Load (ETL) jobs regularly to move the data from the operational store to an analytics store. While this solution has been the standard for decades, it has these three key challenges:

- Cost – Implementing a separate system for analytics requires you to spend on hardware and software licenses, development efforts required for modelling and implementation of ETL and additional operational overhead in maintaining a separate system.

- Complexity – Implementing ETL can be very complex, especially since you need to identify modified rows to migrate and then load that data into the analytics store. Typically, development of ETL can consume up to 70% overall analytics solution development time. Also, you need to ensure while moving data to analytics store, there is no or minimum impact on working of the operational system.

- Data Latency – Having a separate analytics store has an inherent data latency for the analytics workload, i.e. delay to get insight. The data latency will depend upon how often ETL is run. For example, if you run the ETL job every four hours, the analytics will be at least four hours old, likewise if you run the ETL job at end of the business day, the analytics will be at least one day old. At best, data for analytics is as current as the last successful ETL job execution. For many businesses, this data latency in analytics is unacceptable. They require analytics to be done on live or real-time data. For example, fraud-detection is one such application that can leverage real-time analytics on operational data.

Now imagine if we could combine both the operational store and analytics store into a single system to accomplish both the objectives of fast/efficient transaction processing as well as the ability to run reports and analytical queries in real-time on the live data itself.

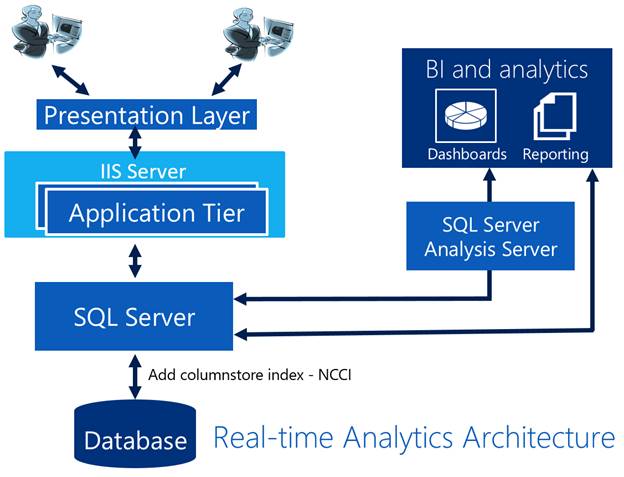

That is exactly the scenario SQL Server 2016 Real-time Operational Analytics targets. SQL Server 2016 introduces Real-time Operational Analytics, the ability to run both operational/transactional and analytics workloads on the same database tables at the same time. Besides running analytics in real-time, you can also eliminate the need for ETL and a separate data warehouse. Real-Time Operational Analytics enables running analytics queries directly on your operational workload using enhanced Columnstore indexes thereby eliminating any data latency required earlier for moving data across different systems.

Looking Back – Analytics so Far

As you can see in the figure below for traditional analytics architecture, there is a separate database for storing data needed for analytics/reporting. There is an ETL pipeline which moves necessary data from operational store (or OLTP store) to analytics store (Data Mart or Data Warehouse dedicated for running analytics queries) on a defined time interval. The Presentation or Visualization layer either directly connects to the Data Warehouse or connects via SQL Server Analysis Services. Use of SQL Server Analysis Services in between the relational Data Warehouse and Visualization layer provides several advantages like multi-dimensional modeling, improved performance because of pre-aggregated data, in memory processing, enhanced security, etc.

Even with issues as discussed earlier in this architecture, it has been in use for several years but now organizations demand real-time analytics so that they are quick to respond to certain events.

Real-time Operational Analytics – How it Works

SQL Server 2012 introduced Columnstore index (non-updateable, Non-Clustered Columnstore Index(NCCI)) which was enhanced in SQL Server 2014 to have updateable Clustered Columnstore Index(CCI). SQL Server 2016 takes it to a new level and allows a fully updateable non-clustered Columnstore index on a rowstore table.

The Columnstore index maintains a copy of the data, so the operational and analytics workloads run against separate copies of the data within the same database. This minimizes the performance impact of both workloads running at the same time. SQL Server automatically maintains index changes so operational changes are always up-to-date for analytics. With this design, it is possible and practical to run analytics queries in real-time on up-to-date data. This works for both traditional disk-based and memory-optimized tables. The figure below shows one possible configuration using Analysis Services in Direct Query mode, but you can use any visualization tool or custom solution at your disposal to either connect directly or connect via Analysis Services. This solution addresses the drawbacks mentioned above as the analysis happens directly on the operational data (no ETL, no data latency and no separate data warehouse – which means cost and complexity are greatly reduced).

Conclusion

SQL Server 2016 introduces Real-time Operational Analytics, the ability to run both operational/transactional and analytics workloads on the same database tables at the same time.

In this article we looked at traditional analytics architecture, challenges it faces, and how the newly introduced Real-time Operational Analytics feature overcomes those challenges. In the next article of the series, we will look into different design considerations for leveraging the Real-time Operational Analytics feature, we’ll look into different scenarios where it fits or where it does not and will look at how to get started with it.

Resources

Get started with Columnstore for real time operational analytics

Understanding New Column Store Index of SQL Server 2012